
Full list of publications. Also available on Google Scholar.

2026

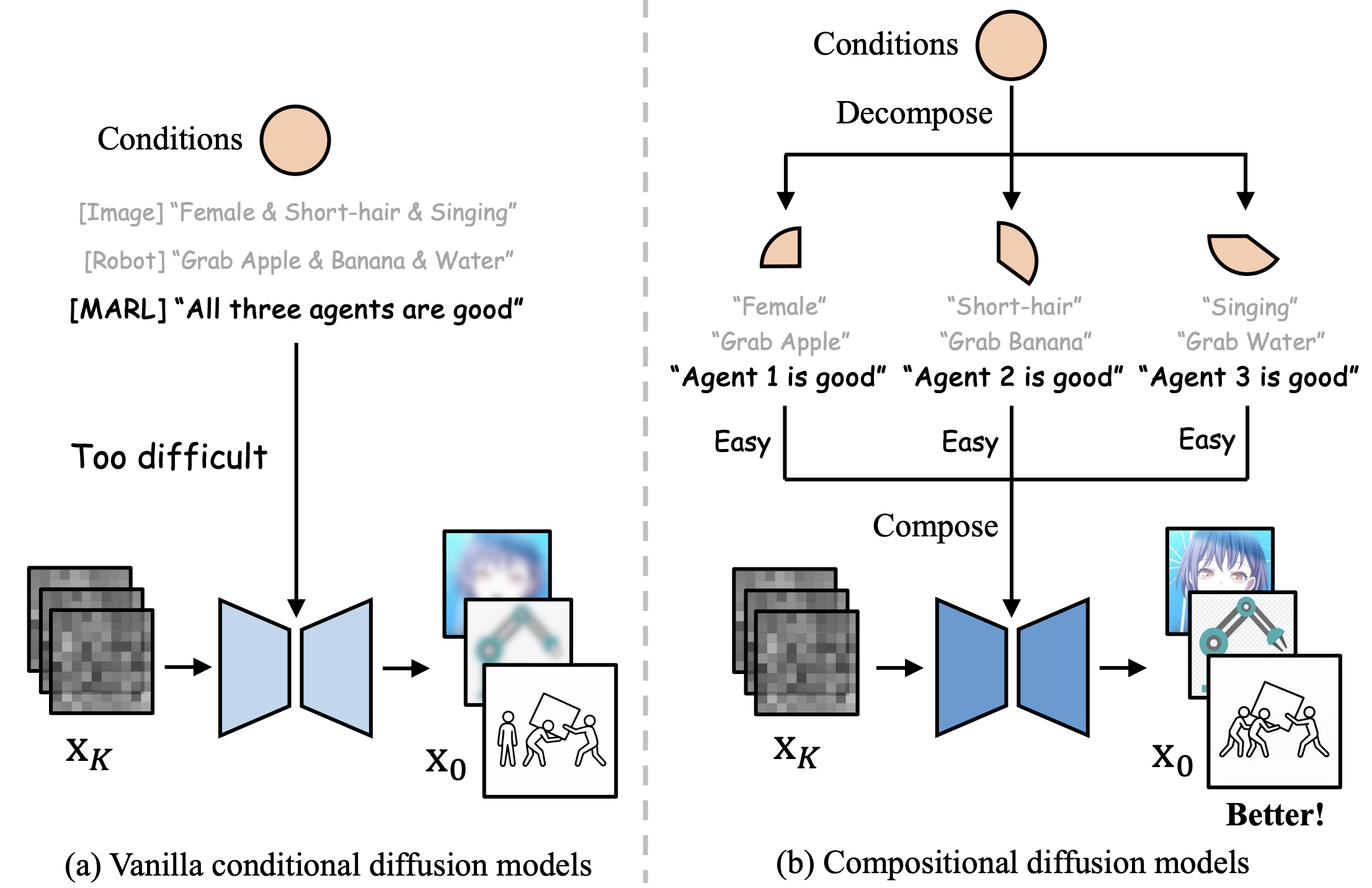

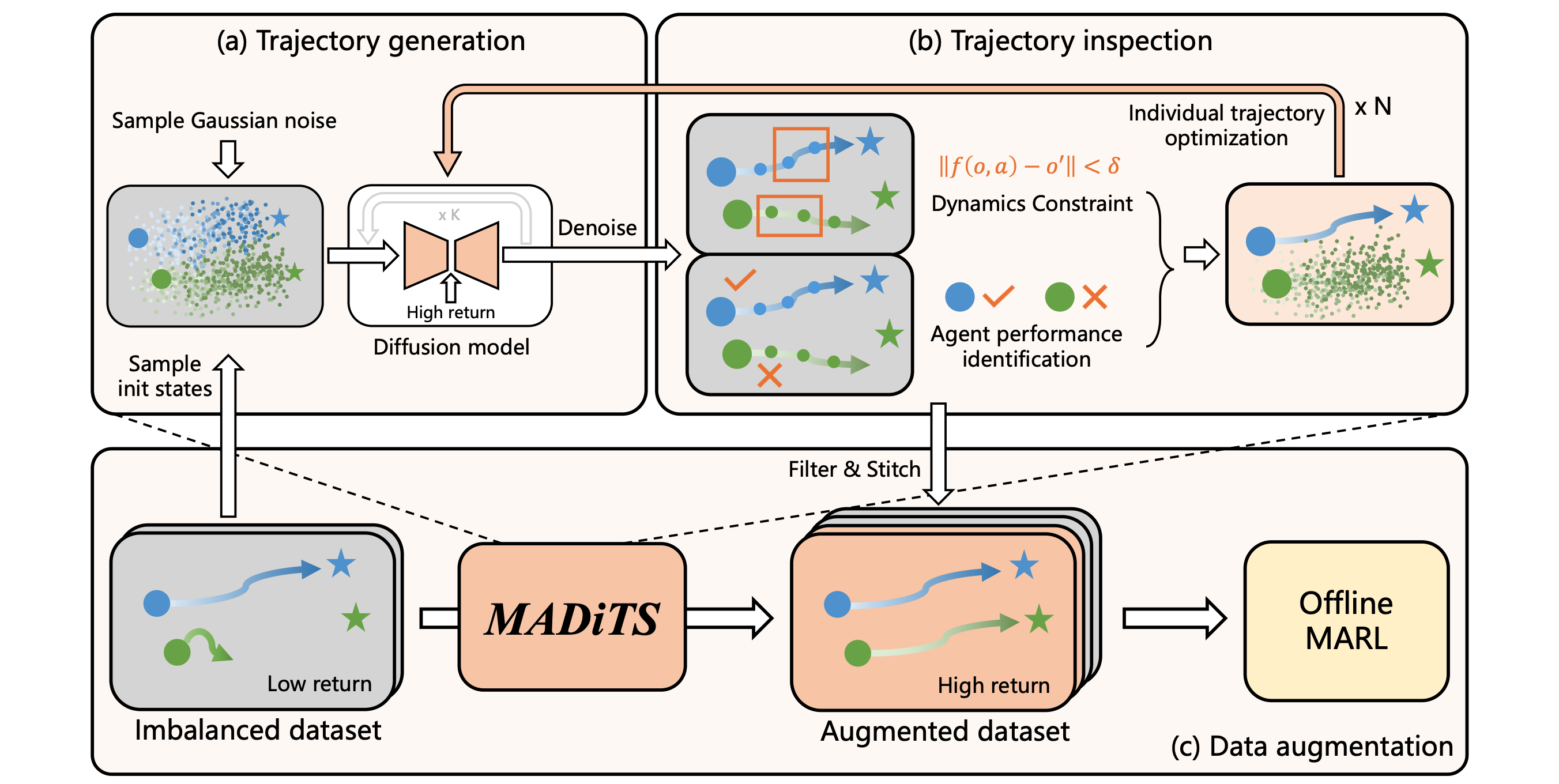

Lihe Li, Shenghe Hu, Bingxuan Lan, Yuqi Bian, Huan Zhang, Ming Zhao, Chongjie Zhang, Lei Yuan, Yang Yu

The 25th International Conference on Autonomous Agents and Multi-Agent Systems (AAMAS), 2026

pdf / bibtex

CODI addresses agent-quality imbalance in offline MARL using LLMs and a compositional diffusion model. It decomposes team-level quality targets into fine-grained agent-level labels to generate balanced, high-quality trajectory segments for data augmentation, which mitigates data imbalance and enables the learning of strong cooperative policies.


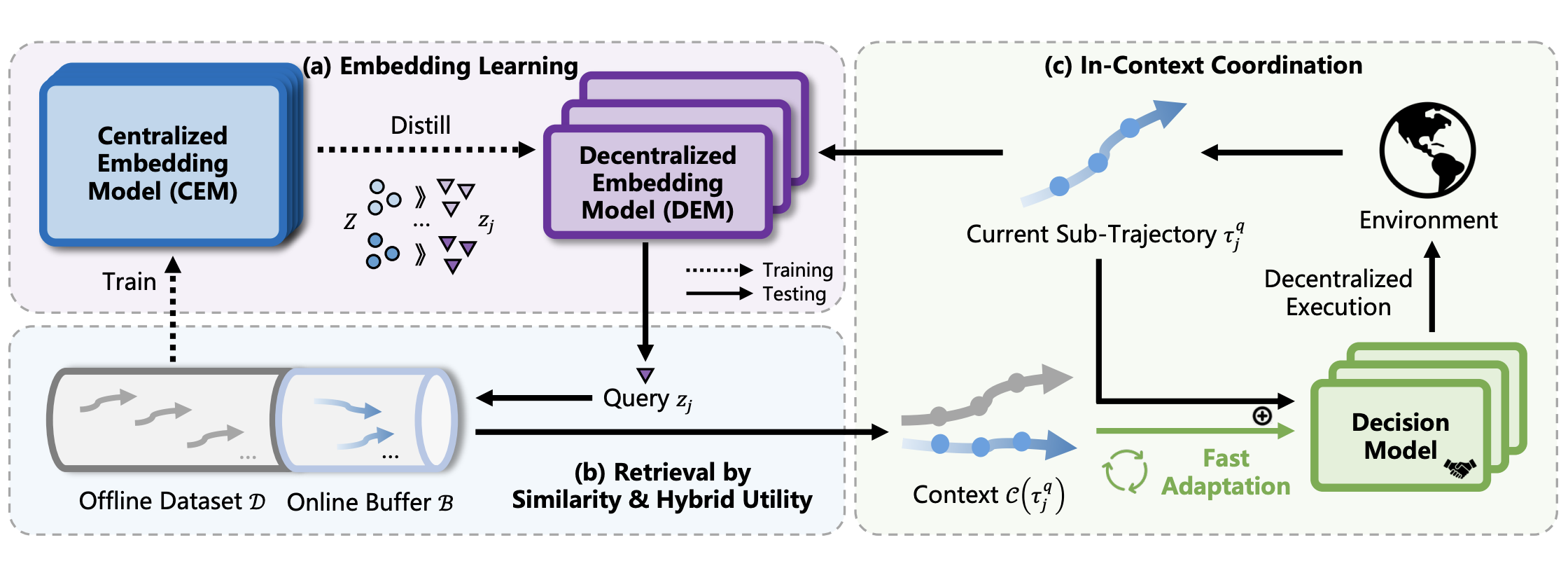

Tao Jiang, Zichuan Lin, Lihe Li, Yi-Chen Li, Cong Guan, Lei Yuan, Zongzhang Zhang, Yang Yu, Deheng Ye

The 40th AAAI Conference on Artificial Intelligence (AAAI), Oral Presentation, 2026

bibtex

MAICC enhances coordination in MARL with a decentralized memory retrieval system that provides agents with relevant context from past trajectories, enabling faster adaptation to new cooperative tasks without parameter updates.

2025

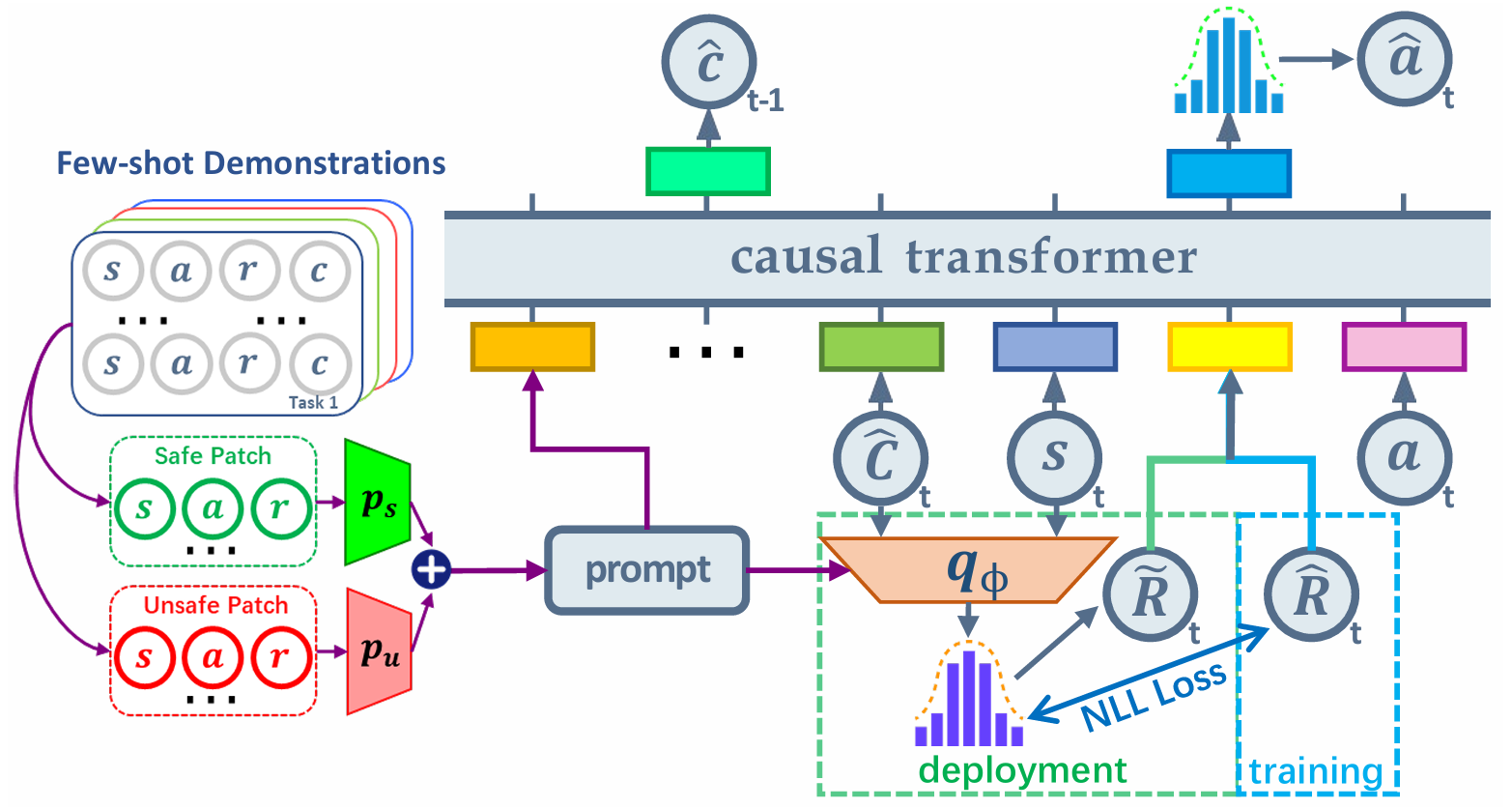

Ruiqi Xue, Ziqian Zhang, Lihe Li, Cong Guan, Lei Yuan, Yang Yu

Advances in Neural Information Processing Systems 39 (NeurIPS), 2025

pdf / link / bibtex

CoPDT enables safe offline RL by prioritizing constraints in Decision Transformers, achieving 2× higher safety compliance than baselines across tasks.


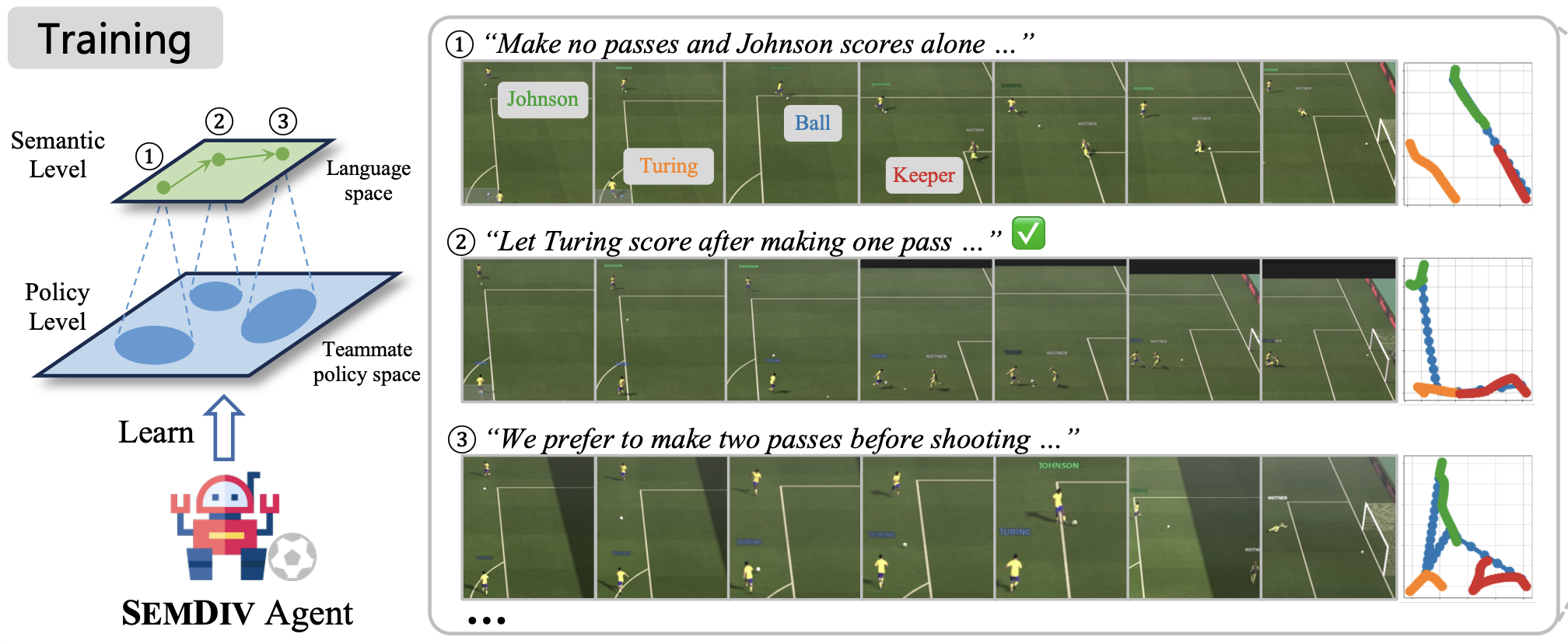

Lihe Li, Lei Yuan, Pengsen Liu, Tao Jiang, Yang Yu

The 42nd International Conference on Machine Learning (ICML), 2025

pdf / link / code / blog / poster / bibtex

Instead of discovering novel teammates only at the policy level, we use LLMs to propose novel coordination behaviors described in natural language and then transform them into teammate policies. This enhances teammate diversity and interpretability, and ultimately yields agents with language comprehension ability and stronger collaboration skills.


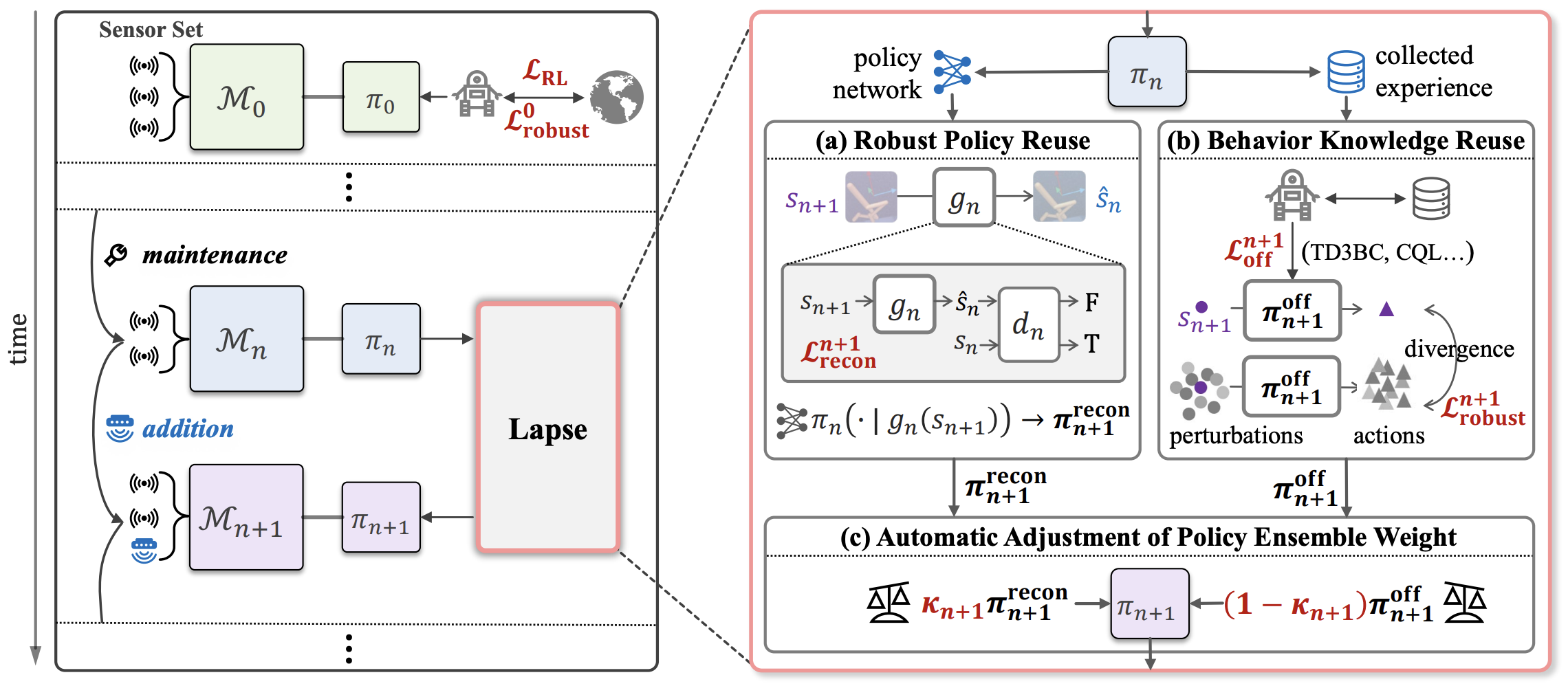

Ziqian Zhang, Bohan Yang, Lihe Li, Yuqi Bian, Ruiqi Xue, Feng Chen, Yi-Chen Li, Lei Yuan, Yang Yu

The 42nd International Conference on Machine Learning (ICML), 2025

pdf / link / bibtex

We address the performance degradation of RL policies when state features (e.g., sensor data) evolve unpredictably. We propose Lapse, a method that reuses old policies by combining them with a state reconstruction model for vanished sensors, and leverages past policy experience for offline training of new policies.


Lei Yuan, Yuqi Bian, Lihe Li, Ziqian Zhang, Cong Guan, Yang Yu

The 13th International Conference on Learning Representations (ICLR), 2025

pdf / link / code / bibtex

We propose a data augmentation technique for offline cooperative MARL that uses diffusion models to improve dataset quality.


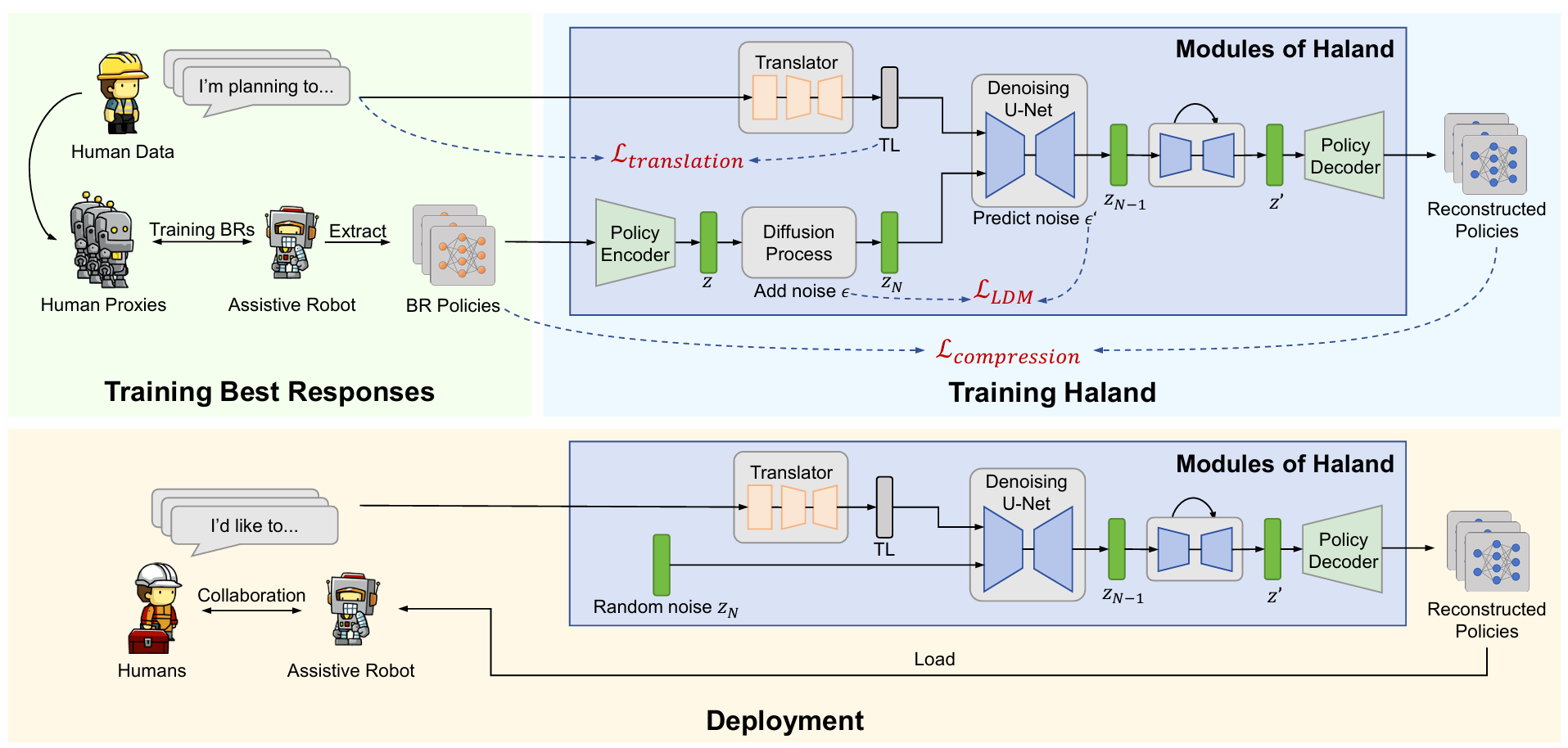

Lei Yuan, Kunmin Lin, Ziqian Zhang, Lihe Li, Feng Chen, Jingyu Ru, Cong Guan, Yang Yu

Science China Information Sciences (SCIS)

pdf / link / bibtex

By compressing diverse best-response policies into a language-conditioned diffusion model, Haland efficiently aligns human preferences with AI behavior for seamless collaboration.

2024

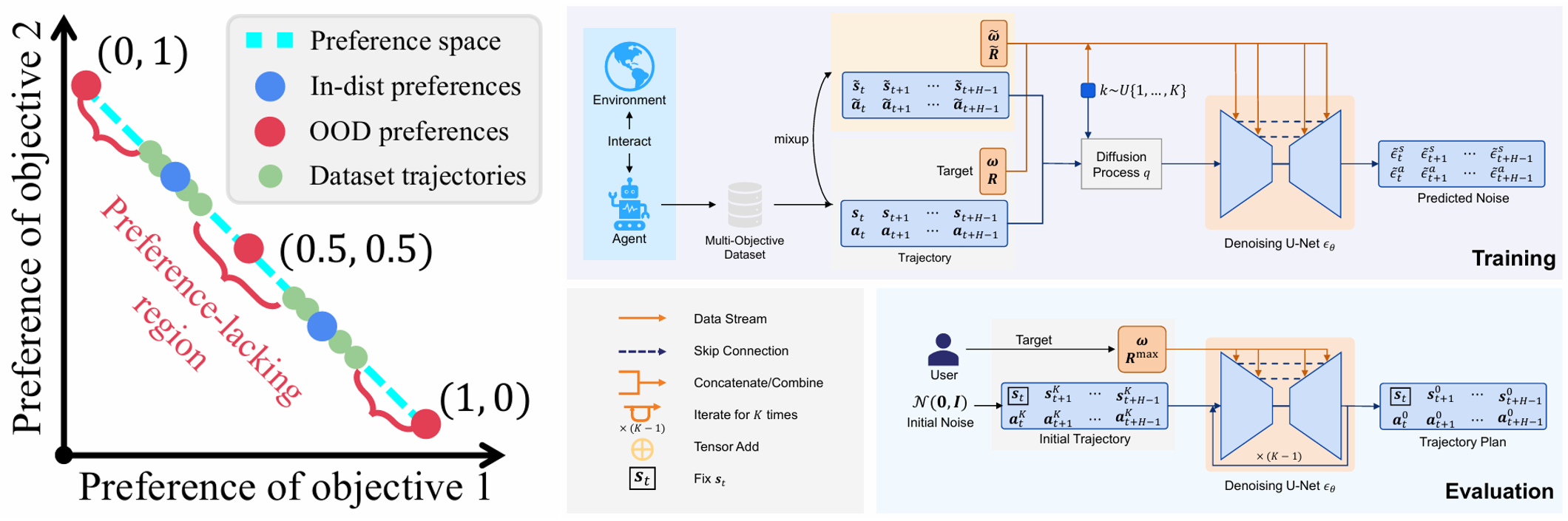

Yuchen Xiao, Lei Yuan, Lihe Li, Ziqian Zhang, Yi-Chen Li, Yang Yu

IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

pdf / link / bibtex

DiffMORL advances offline multi-objective RL with a diffusion-based planning framework, improving generalization via data mixup and outperforming baselines on out-of-distribution (OOD) preferences.


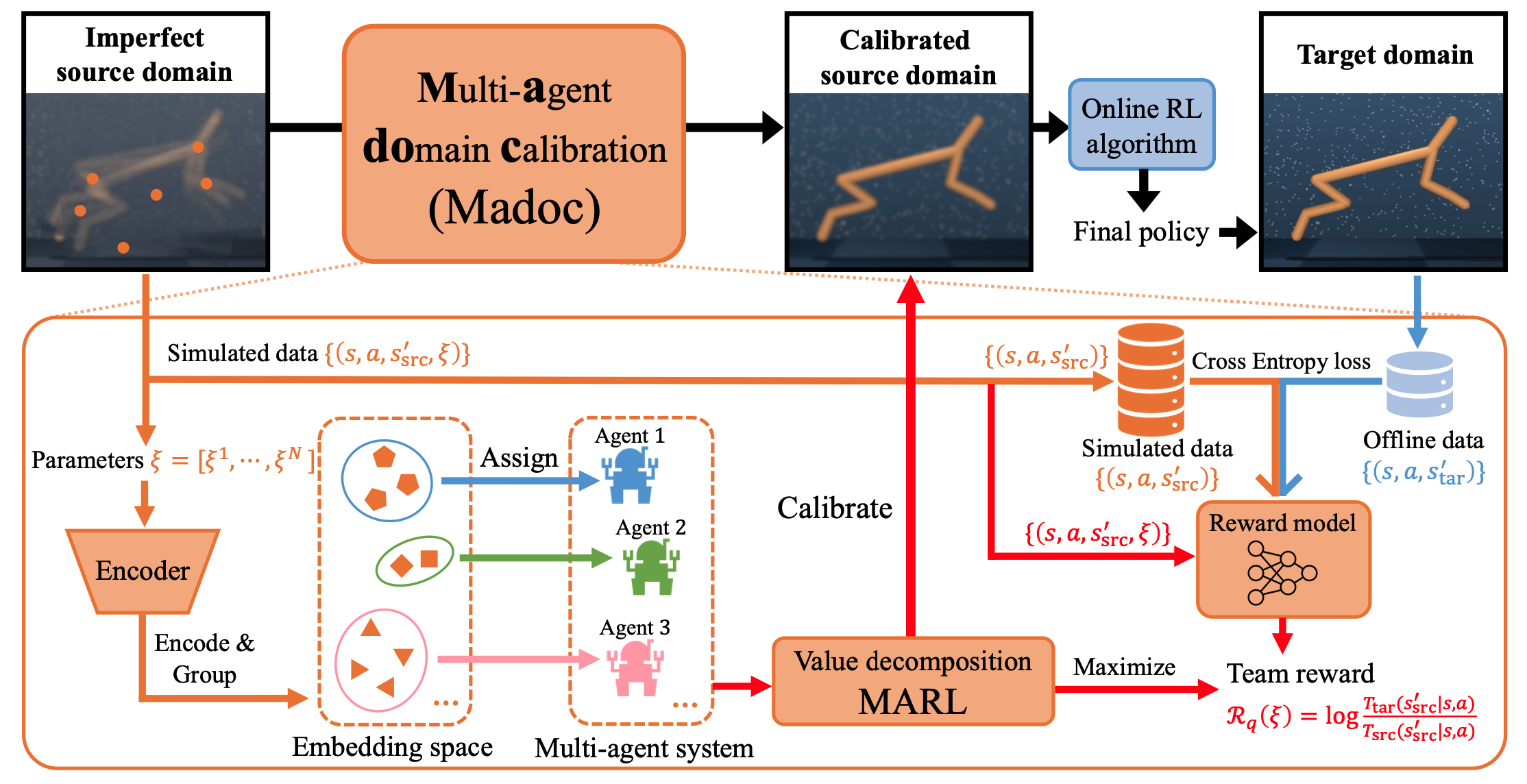

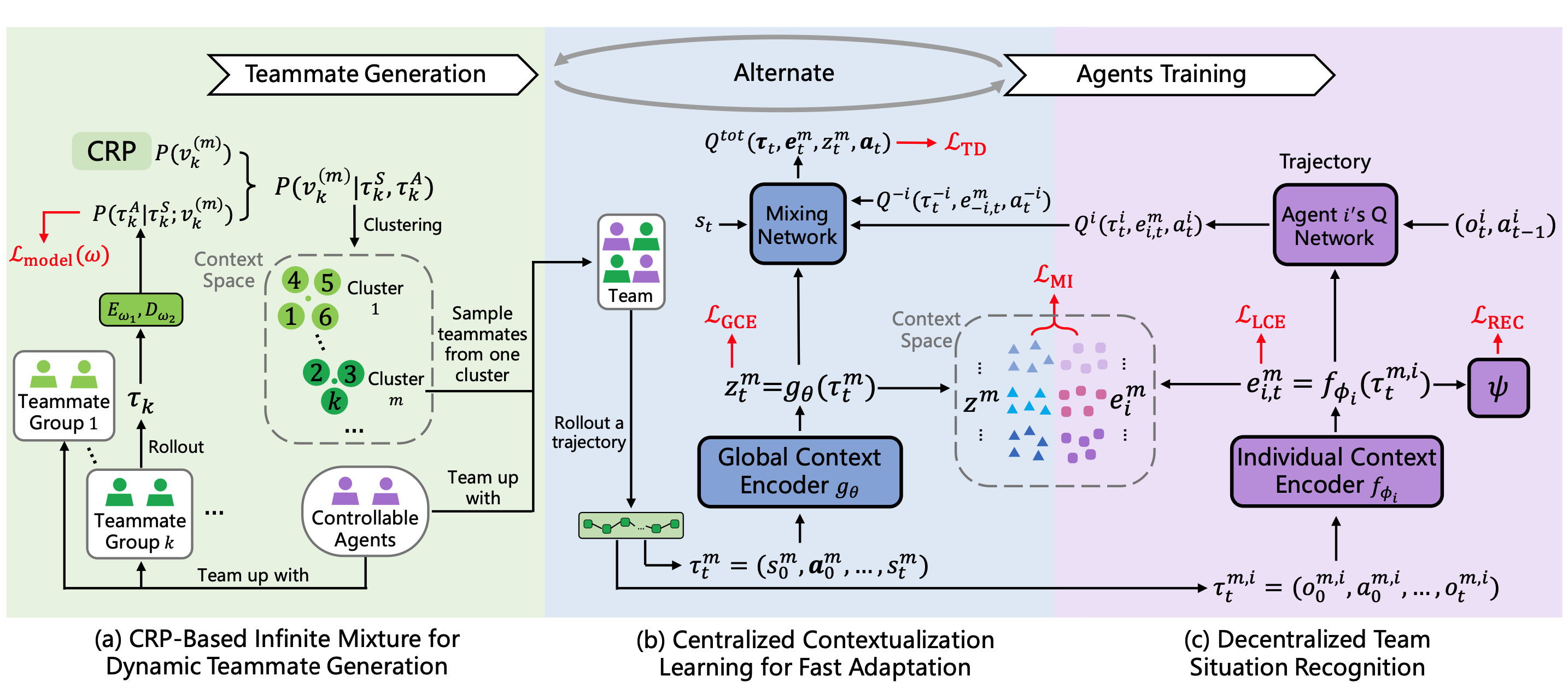

Tao Jiang, Lei Yuan, Lihe Li, Cong Guan, Zongzhang Zhang, Yang Yu

Advances in Neural Information Processing Systems 38 (NeurIPS), 2024

pdf / link / code / bibtex

We formulate domain calibration as a cooperative MARL problem to improve efficiency and fidelity.


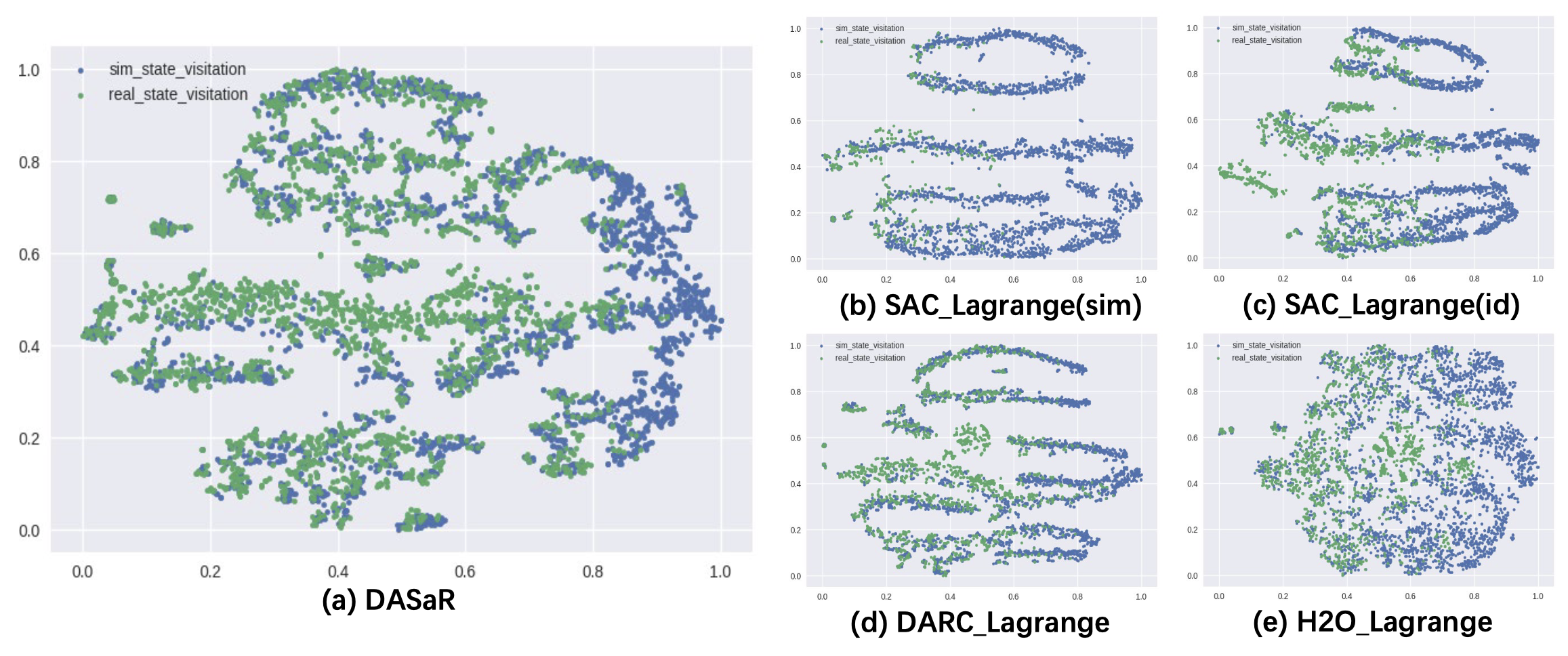

Ruiqi Xue, Ziqian Zhang, Lihe Li, Feng Chen, Yi-Chen Li, Yang Yu, Lei Yuan

Joint European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), 2024

pdf / link / bibtex

We propose DASaR, which expands the trust region in sim-to-real RL by aligning simulator and real-world value functions through inverse dynamics-based relabeling of rewards and costs.


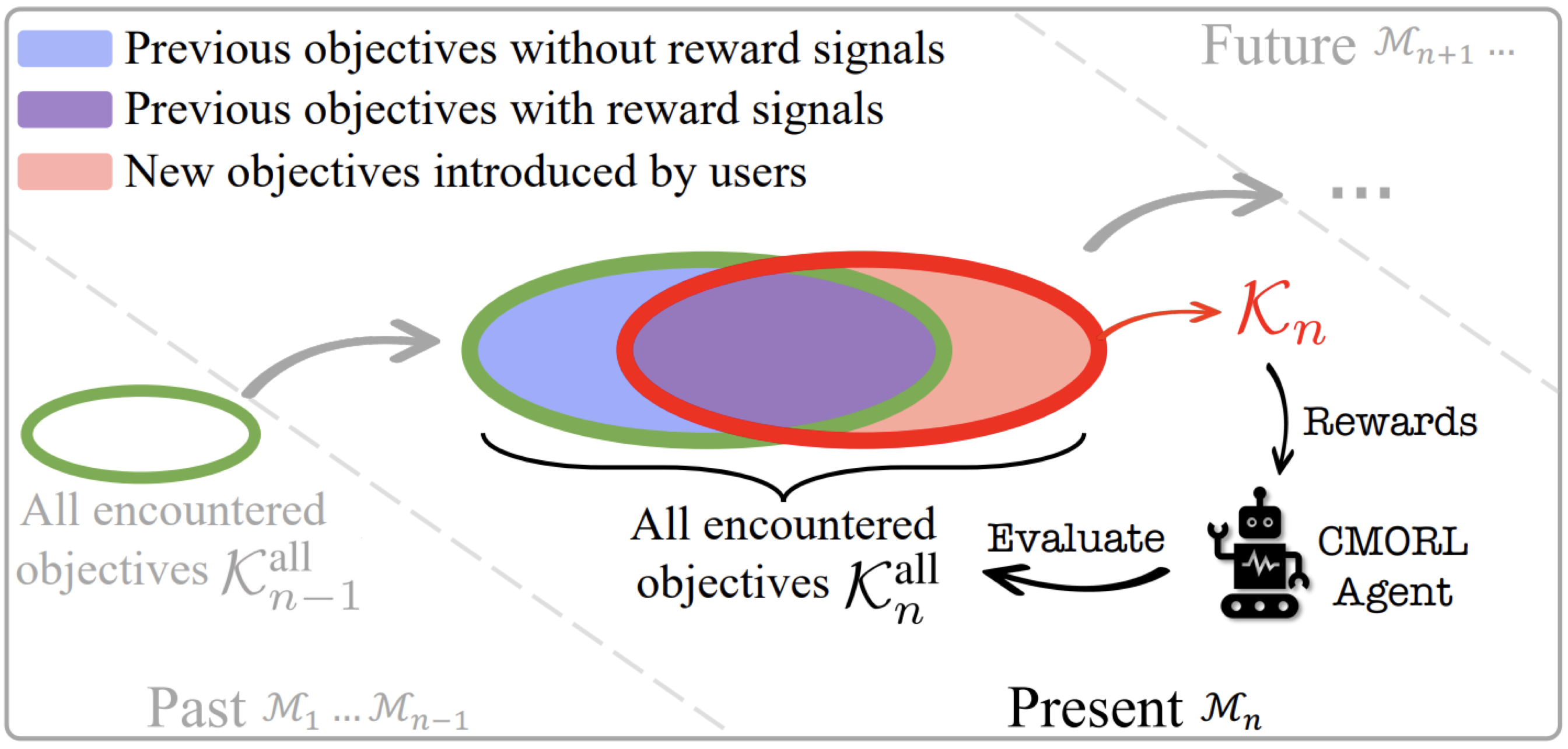

Lihe Li, Ruotong Chen, Ziqian Zhang, Zhichao Wu, Yi-Chen Li, Cong Guan, Yang Yu, Lei Yuan

The 33rd International Joint Conference on Artificial Intelligence (IJCAI), 2024

pdf / link / code / talk / poster / bibtex

We study multi-objective reinforcement learning (MORL) with continually evolving learning objectives, and propose CORe3 to enable the MORL agent to rapidly learn new objectives while avoiding catastrophic forgetting of old objectives that lack reward signals.


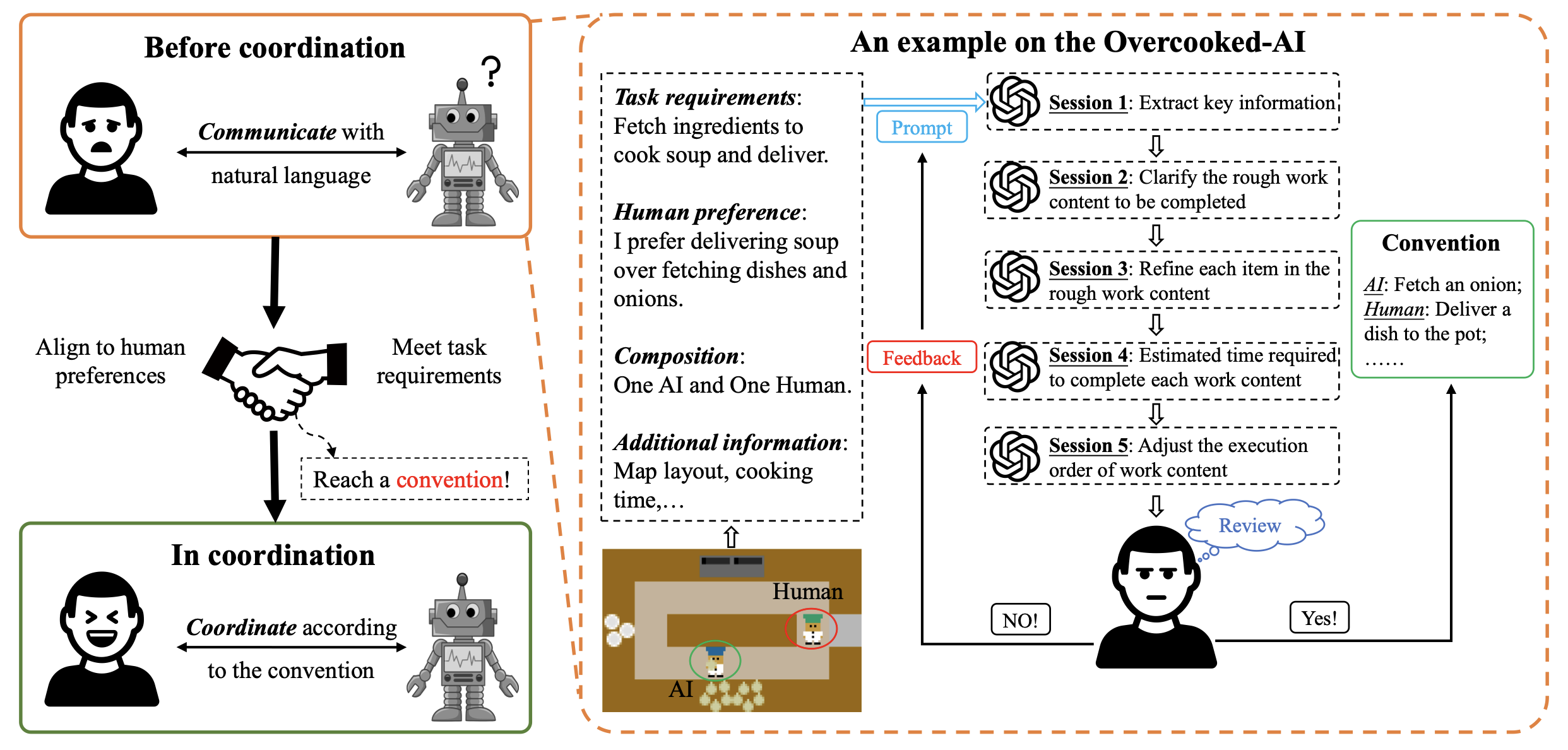

Cong Guan, Lichao Zhang, Chunpeng Fan, Yi-Chen Li, Feng Chen, Lihe Li, Yunjia Tian, Lei Yuan, Yang Yu

The 12th International Conference on Learning Representations (ICLR), Workshop on Large Language Model (LLM) Agents, 2024

pdf / link / bibtex

We propose employing large language models (LLMs) to develop an action plan (or, equivalently, a convention) that effectively guides both humans and AI to coordinate.


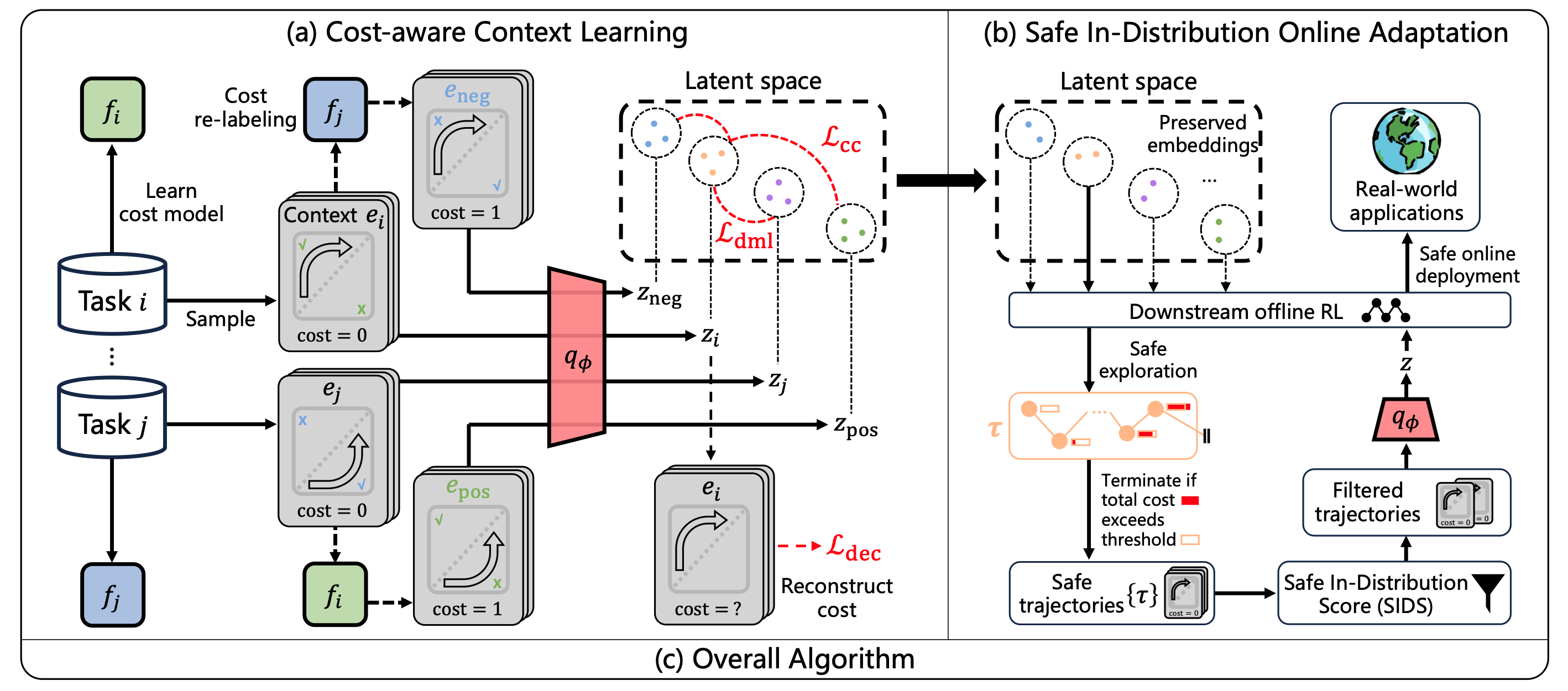

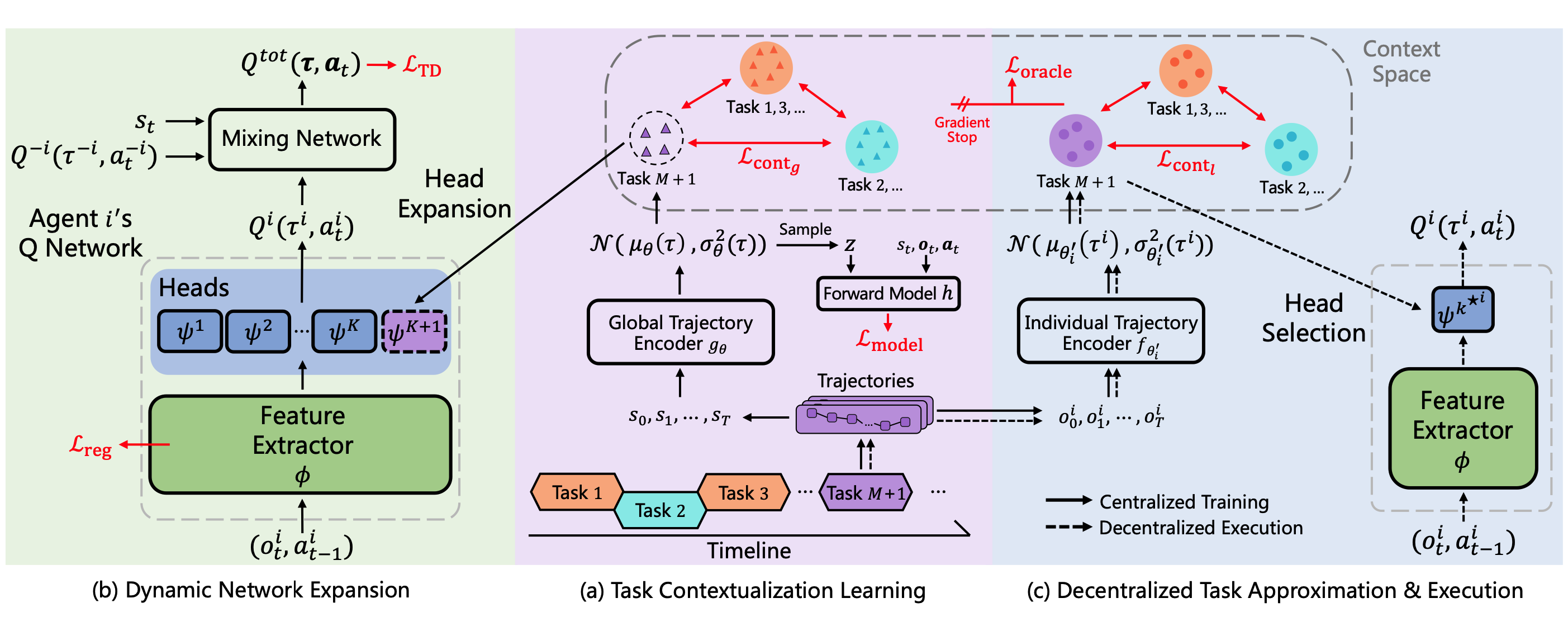

Cong Guan, Ruiqi Xue, Ziqian Zhang, Lihe Li, Yi-Chen Li, Lei Yuan, Yang Yu

The 23rd International Conference on Autonomous Agents and Multi-Agent Systems (AAMAS), 2024

pdf / link / code / poster / bibtex

We propose COSTA to address offline safe meta-RL problems. We develop a cost-aware task inference module that uses contrastive learning to distinguish tasks based on their safety constraints, and propose a safe in-distribution online adaptation mechanism.

2023

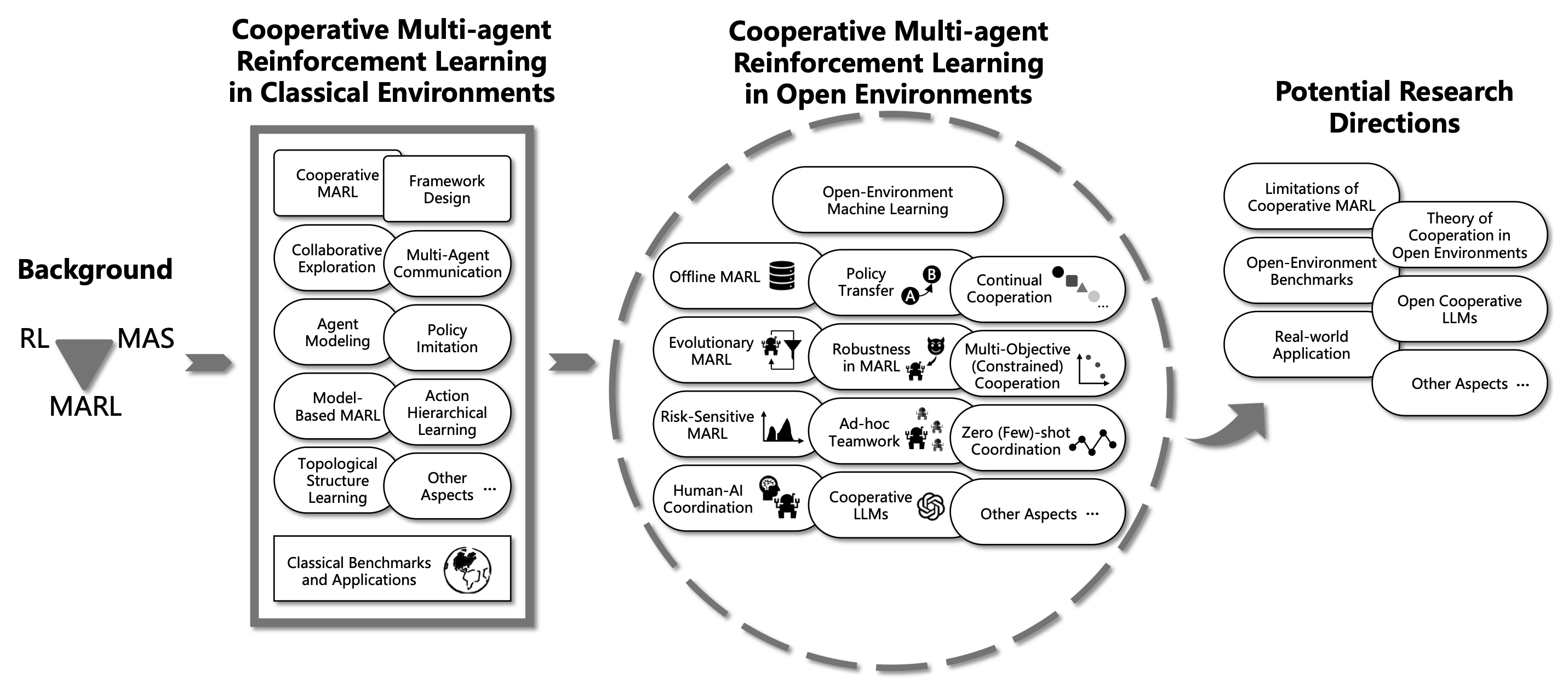

Lei Yuan, Ziqian Zhang, Lihe Li, Cong Guan, Yang Yu

Science China Information Sciences (SCIS)

pdf in English / pdf in Chinese / link / bibtex

We review multi-agent cooperation from closed environment to open environment settings, and provide prospects for future development and research directions of cooperative MARL in open environments.


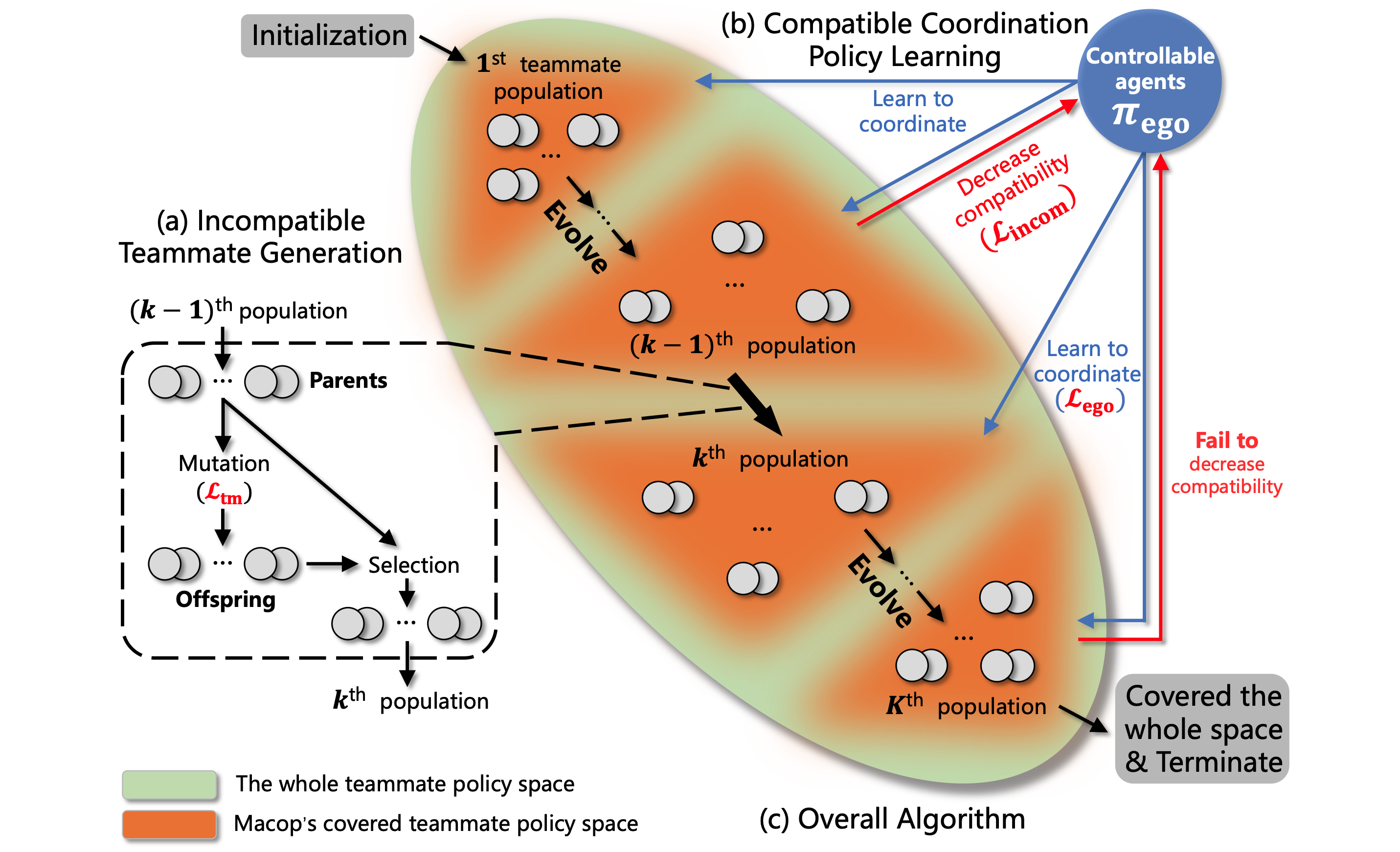

Lei Yuan, Lihe Li, Ziqian Zhang, Feng Chen, Tianyi Zhang, Cong Guan, Yang Yu, Zhi-Hua Zhou

Proceedings of the Fifth International Conference on Distributed Artificial Intelligence (DAI), Best Paper Award, 2023

pdf / link / code / English talk / Chinese talk / bibtex

We propose Multi-agent Compatible Policy Learning (MACOP), which adopts an agent-centered teammate generation process that gradually and efficiently generates diverse teammates covering the teammate policy space, and uses continual learning to train the ego agents to coordinate with them and acquire strong coordination ability.


Ziqian Zhang, Lei Yuan, Lihe Li, Ke Xue, Chengxing Jia, Cong Guan, Chao Qian, Yang Yu

The 39th Conference on Uncertainty in Artificial Intelligence (UAI), 2023

pdf / link / poster / bibtex

We formulate the Open Dec-POMDP and propose Fast teammate adaptation (Fastap) to enable controllable agents in a multi-agent system to quickly adapt to uncontrollable teammates whose policies may change within an episode.


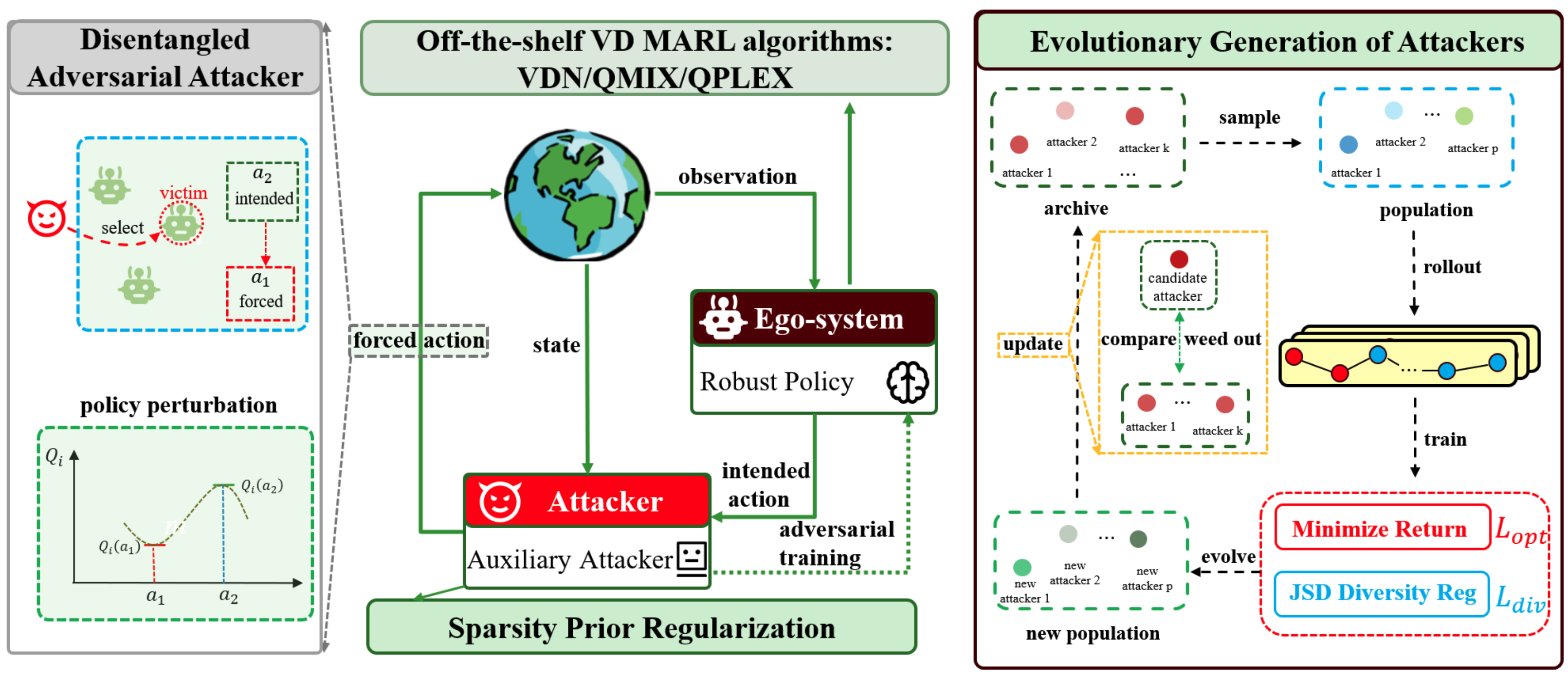

Lei Yuan, Ziqian Zhang, Ke Xue, Hao Yin, Feng Chen, Cong Guan, Lihe Li, Chao Qian, Yang Yu

The 37th AAAI Conference on Artificial Intelligence (AAAI), Oral Presentation, 2023

pdf / link / code / poster / bibtex

We formulate the Limited Policy Adversary Dec-POMDP and propose ROMANCE, which exposes the trained agents to diverse and strong auxiliary adversarial attacks during training, achieving high robustness under various policy perturbations.


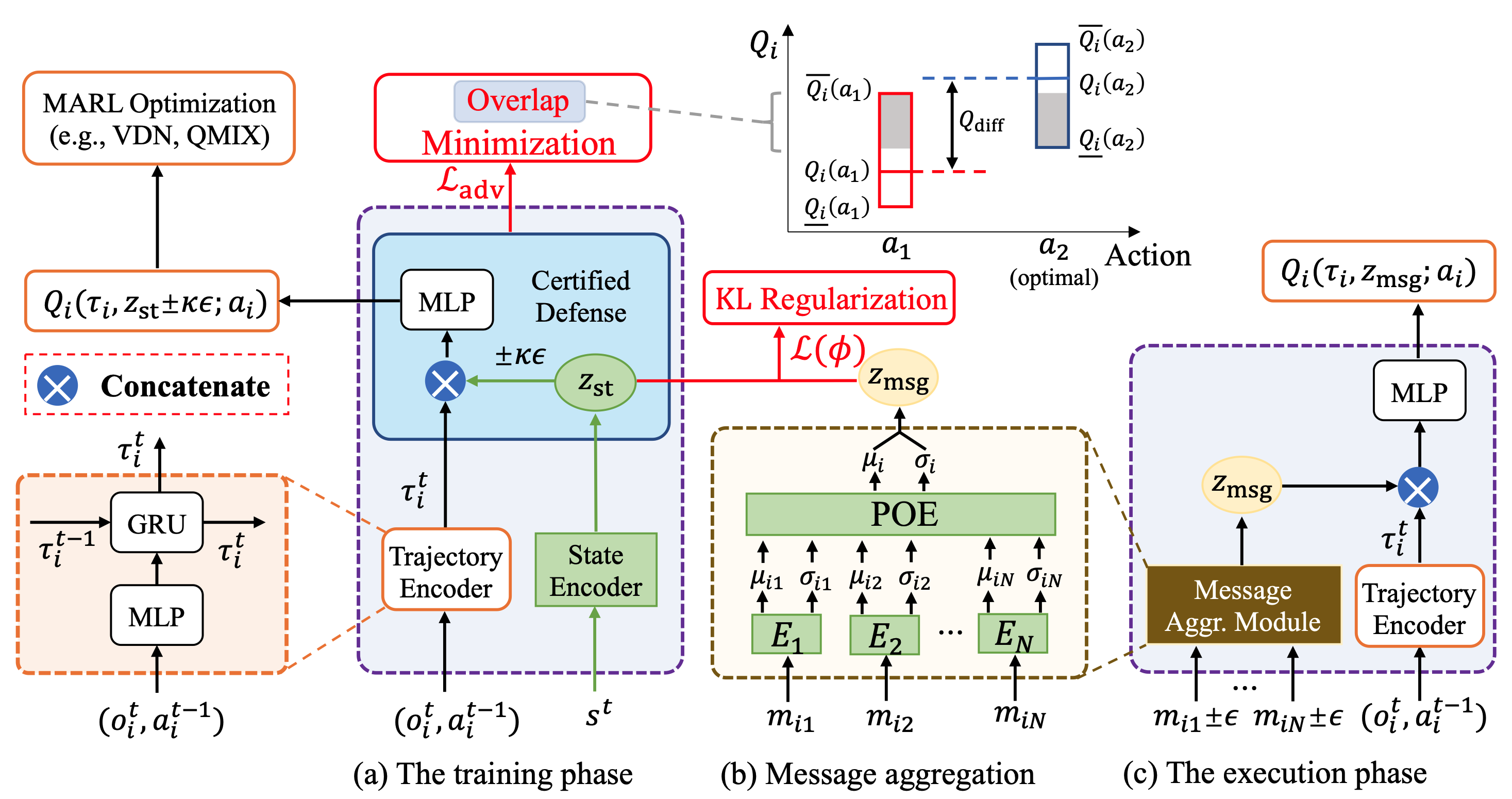

Lei Yuan, Tao Jiang, Lihe Li, Feng Chen, Zongzhang Zhang, Yang Yu

Science China Information Sciences (SCIS)

pdf / link / code / poster / bibtex

We propose CroMAC to enable agents to obtain guaranteed lower bounds on state-action values to identify and choose the optimal action under a worst-case deviation when the received messages are perturbed.


Lei Yuan, Lihe Li, Ziqian Zhang, Fuxiang Zhang, Cong Guan, Yang Yu

IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

pdf / link / code / poster / bibtex

We formulate the continual coordination framework and propose MACPro to enable agents to continually coordinate with each other when the dynamics of the training task and of the multi-agent system itself change over time.